Sanctions, removal from lawsuits, and reputational damage are among the professional risks of using public, web-scraped AI

Highlights

- Consumer AI tools create malpractice risks through fictional citations and unreliable legal research.

- Federal judges have imposed thousands of dollars in fines for fabricated legal citations generated by AI.

- Professional-grade legal AI tools offer accuracy exceeding 95%, compared with 60-70% for consumer tools.

As law firms rush to adopt AI, many now realize that using consumer-grade tools can turn cost-saving measures into career-ending liabilities. What starts as a simple attempt to streamline research or drafting can quickly escalate into professional sanctions, removal from lawsuits, and reputational damage worth millions.

Most consumer AI tools are trained on web-scraped content from across the internet, treating blog posts and random opinions with the same weight as authoritative legal sources. They lack systematic updates when laws change or cases are overturned, and no legal experts validate the content before it enters their training data.

Without consistent citation control, these tools frequently mischaracterize case law, apply precedents incorrectly, or generate entirely fictional case names and citations. As such, they are not designed to deliver the accuracy, sourcing, and professional oversight that the legal profession demands.

Documented cases of consumer AI malpractice

Today, courts are scrutinizing AI-generated legal work, with some federal judges penalizing lawyers who use tools that are prone to fabricating information.

Levidow, Levidow & Oberman

In one of the first judicial rebukes of attorneys using AI, a federal judge in Manhattan fined two New York lawyers $5,000 in June 2023. The penalty was issued for citing cases generated by ChatGPT during a personal injury lawsuit against an airline. The judge found that the lawyers acted in bad faith, pointing to “acts of conscious avoidance and false and misleading statements to the court.”

The judge stated that it is not “inherently improper” for lawyers to use AI as a tool but emphasized that ethical rules require attorneys to act as gatekeepers and ensure their filings are accurate.

Senate Judiciary Committee inquiries

Congress has recently raised concerns about how AI is being used in federal court cases. Senator Chuck Grassley of the Senate Judiciary Committee questioned two federal judges who withdrew rulings after it emerged that AI had been used in preparing them. U.S. District Judges Julien Xavier Neals (New Jersey) and Henry Wingate (Mississippi) admitted that AI platforms were involved in drafting decisions that were later retracted: ChatGPT, used by Judge Neals’ intern, and Perplexity, used by Judge Wingate’s law clerk.

Although both judges quickly corrected their mistakes, the incidents show that AI hallucinations are real problems, not just theoretical concerns. When federal judges stress their responsibility to maintain accuracy and integrity, the message to legal professionals is clear: consumer AI tools introduce unacceptable risks that can damage our legal system’s credibility.

Monk Law Firm

In November 2024, a Texas federal judge fined lawyer Brandon Monk $2,000 for submitting a court filing with fake cases and AI-generated quotes in a wrongful termination suit against Goodyear Tire & Rubber. Goodyear’s counsel had been unable to find several of the cases he cited in opposing their summary judgment motion. Monk was also required to take a generative AI legal course.

U.S. District Judge Marcia Crone had previously ordered Monk to explain why he should not face sanctions for failing to comply with federal and local court rules, including requirements to verify content generated by technology.

Morgan & Morgan

Lawyers from personal injury firm Morgan & Morgan learned a costly lesson about AI reliability in February 2025, when a federal judge imposed $5,000 in total fines for using fabricated case citations generated by AI. One lawyer received a $3,000 fine and removal from the lawsuit after admitting he used an internal AI program that “hallucinated” the cases he incorporated into a court filing. Another lawyer and local counsel were fined $1,000 each for failing to ensure the accuracy of the AI-assisted work.

In deciding sanctions, U.S. District Judge Kelly Rankin of Wyoming noted that lawyers “have been on notice” about AI’s ability to hallucinate cases. While he acknowledged that the lawyer’s honesty and Morgan & Morgan’s steps to prevent future incidents warranted less severe punishment, his ruling reinforced that professional verification duties remain unchanged in the AI era.

“When done right, AI can be incredibly beneficial for attorneys and the public,” Rankin wrote. “The instant case is simply the latest reminder to not blindly rely on AI platforms’ citations regardless of profession.”

The costs of malpractice

The documented sanctions represent only the tip of the iceberg. Beyond the immediate financial penalties, AI-related malpractice creates consequences that can threaten a firm’s long-term viability.

Reputational damage

Word travels quickly through bar associations, legal publications, and professional networks when a firm faces AI-related sanctions. Potential clients research firms online, and negative coverage about unreliable AI use can overshadow decades of solid legal work.

Operational disruption

When AI-related errors surface, firms must conduct comprehensive reviews of all AI-assisted work. Associates may need to re-verify research, and partners may need to re-examine strategic decisions. Court cases may face delays, client relationships may require damage control, and internal processes may need complete overhauls.

Client migration

When trust fades, clients move to more dependable law firms. Corporate counsel and repeat clients in particular monitor their outside firms’ risk management practices. They cannot afford to associate with firms that use unreliable technology, especially when competitors offer the same expertise with better risk controls. Losing major clients over AI-related concerns can cost more than the original court-imposed fine.

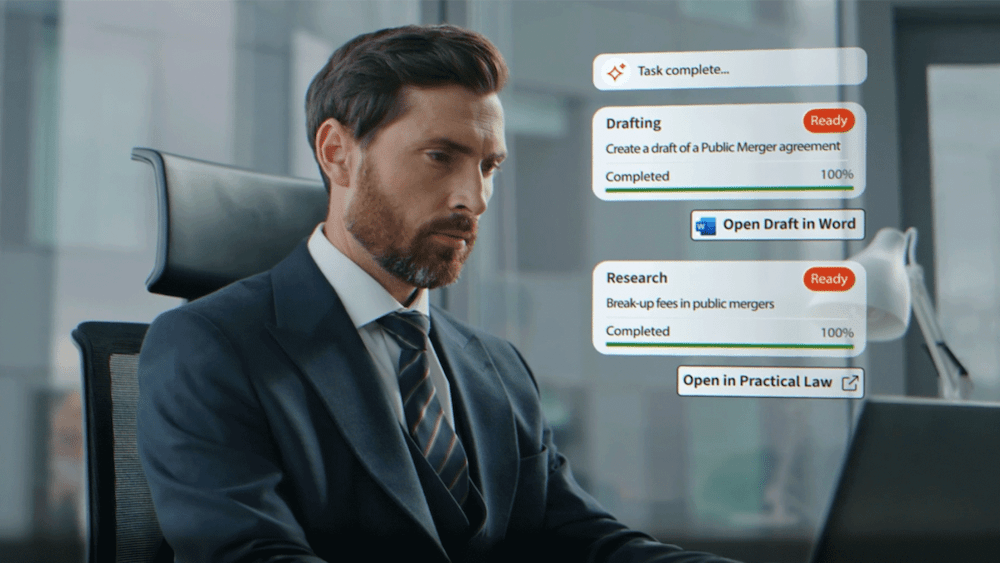

CoCounsel Legal, the professional-grade AI alternative

How can lawyers address the flaws in consumer AI tools? The answer lies in purpose-built legal AI that prioritizes professional standards over convenience. CoCounsel Legal represents a fundamentally different approach to legal AI, designed from the ground up to meet the unique demands of legal practice.

Built on Westlaw and Practical Law resources maintained by over 1,500 attorney editors, CoCounsel Legal draws exclusively from authoritative legal sources that have undergone professional review and validation.

While consumer AI tools typically achieve 60-70% accuracy rates, CoCounsel Legal delivers professional-grade accuracy exceeding 95%. This improvement directly addresses the reliability gap that makes consumer AI unsuitable for professional legal work. Every response includes complete citations and sourcing information, allowing attorneys to trace each answer back to verified sources.

Developed specifically for legal professionals, CoCounsel Legal incorporates enterprise-level security standards and compliance measures that protect sensitive client information. It integrates seamlessly with existing legal workflows — including Microsoft 365 and document management systems — allowing attorneys to enhance their efficiency without compromising their professional obligations.

Maintain accuracy and reliability

Consumer AI tools create liability exposures that far exceed any initial cost savings. When courts emphasize their duty to ensure accuracy and integrity, shouldn’t your AI tools meet the same professional standards?

Discover CoCounsel Legal’s professional-grade capabilities and experience AI built on trusted legal intelligence.