Addressing ethical and security concerns in AI usage, emphasizing transparency, accountability, and responsible governance frameworks.

In our Future of Professionals report, we address the many concerns and challenges raised around the use of AI in professional and business settings. Legal and accounting businesses face the task of figuring out how to use AI ethically and responsibly. For decades, Thomson Reuters has been a thought leader in developing principles to guide practice and in helping our clients navigate the complexity of AI. As generative artificial intelligence becomes more embedded in our personal and professional lives, we strive to provide the most up-to-date research and best practices for leveraging these technologies with accountability and transparency.

Ethical and security issues of AI

While many legal and accounting firms are still determining their AI practices and governance, a number of issues need to be addressed. Older generations are being challenged to adapt to the changes AI is bringing to professional services, and some may resist out of fear that AI will push ethics out the window. Younger generations, meanwhile, are focused on the possibilities generative AI opens up. Still, there seems to be little debate that the businesses that embrace AI will be the ones to get ahead.

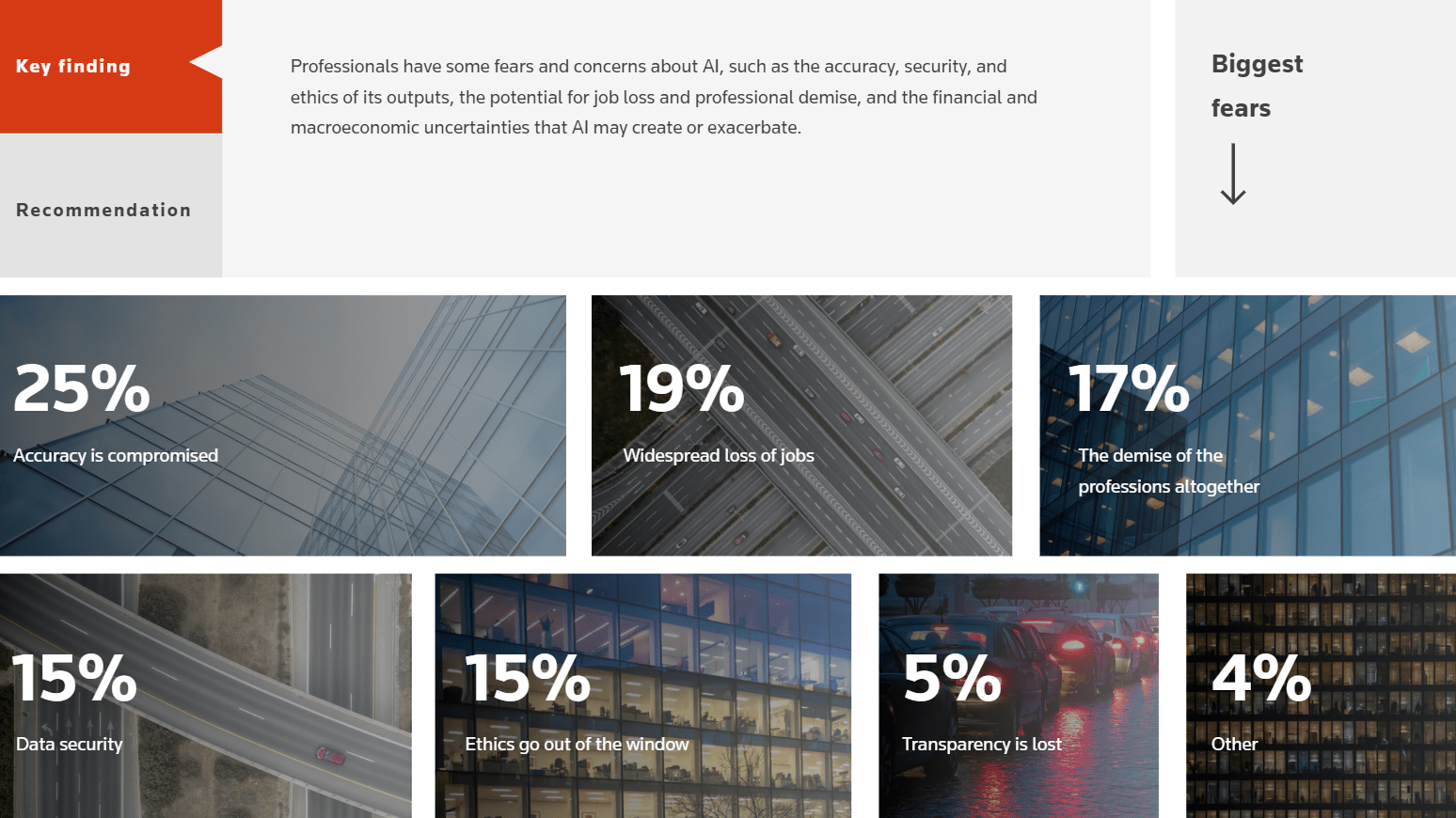

Across many industries, 15% of professionals reported data security and ethics as their biggest fear, with a lack of transparency and accountability close behind. Part of AI's appeal is that it can assist with many repetitive, time-consuming tasks in the operations of a professional firm or department. It has also been found to improve mental health by relieving anxiety, isolation, and burnout: by taking on more of this type of work, AI tools free up time for professionals to nurture client relationships and grow their client base.

Although AI can help businesses and departments do beneficial work, there are ethical considerations to take into account. AI can also help fraudsters conduct their activities more efficiently and accurately. It is important to remember that AI generates responses using algorithms built by humans and information supplied by humans. As such, humans should be held accountable for verifying and fact-checking what AI generates.

The eagerness to leverage AI has created situations that highlight the necessity of consent and accountability, including cases where AI has "hallucinated" an answer (that is, generated a plausible-sounding but fabricated response) that led to legal and ethical consequences. Because generative AI tools often do not reveal the sources behind their responses, AI policies should include a human validation process. AI is also subject to bias: the data used to train a model may skew its output toward one outcome over another, which is why bias risk mitigation is needed. Left unchecked, bias can erode trust and fairness.

Using AI responsibly

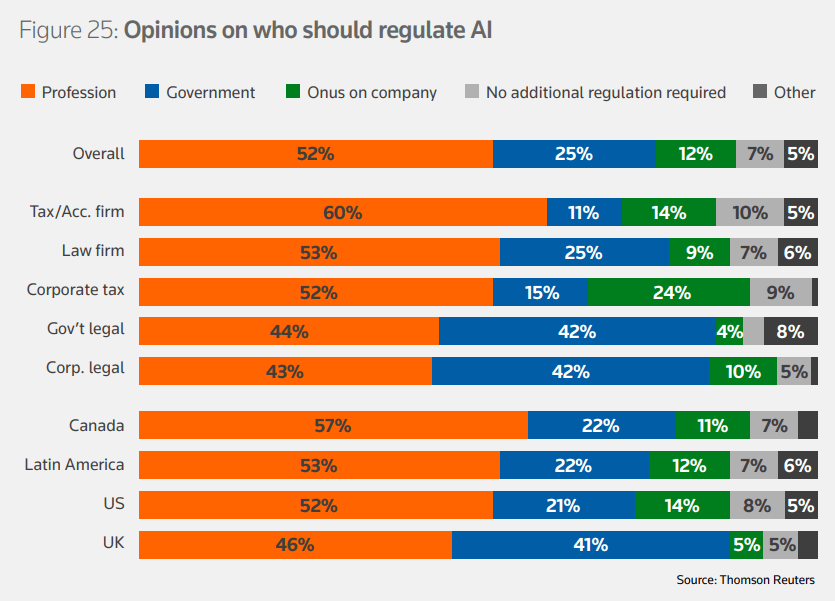

The United States has issued an Executive Order on Safe, Secure, and Trustworthy Artificial Intelligence that establishes principles and guidelines for federal agencies to follow when implementing an AI system. It also offers a framework for working with various stakeholders. The Future of Professionals report shows that 52% of professionals believe that regulations governing the professional ethics of AI are a necessity, and 25% believe that governments should be designing and overseeing professional ethics regulations.

AI governance frameworks should cover key areas such as encryption and authentication protocols, frequent auditing and testing procedures, traceability, educating employees on the proper and ethical use of AI, and best practices for securing and protecting confidential data. Ultimately, businesses and departments should be able to understand how an algorithm arrives at its output and where its data comes from. Because AI models draw on large data sets, those data sets should be legitimate and well qualified to produce the most accurate and relevant output.

If AI is to be incorporated into your workflow, human-centric design should be at the core of the initiative. Transparency and accountability are both crucial to maintaining trust with clients, users, and employees. Biases should be avoided, fairness should be promoted, and security should be the top priority.

Given the private and confidential nature of the information that professionals are working with, we believe that developing internal AI regulations and governance at the firm level is critical to establishing trust, accountability, and transparency around the consent and privacy of client information. It’s important to understand the principles behind AI ethics and make sure your organization has a framework that is tailored to the business and your customers.

Stakeholder confidence in your adoption of AI rests on professionals' ability to provide high levels of service, give access to information on how AI is used, and foster a culture of risk mitigation and awareness. For more information, download the Future of Professionals report.
