How AI laws are evolving globally and in the US — from the EU AI Act to state-level reforms — plus how AI is reshaping the legal profession

Reviewed by Amanda O’Keefe, Director, Global Data Privacy & Cybersecurity, Thomson Reuters

Highlights

- As AI adoption grows, legal professionals must ensure safeguards are in place and balance innovation with public safety

- The EU AI Act classifies AI systems into four risk levels, imposes stringent compliance measures on higher-risk systems, and prohibits practices that pose unacceptable risks

- US federal agencies like the SEC, FTC, and FCC have used rules, guidance, and enforcement initiatives to address specific AI applications

Artificial intelligence (AI) can enhance productivity in many areas of life and change the way lawyers work. As more law firms and businesses adopt AI technologies, new ethical challenges and risks arise.

To manage these risks and balance public safety with innovation, several countries, including the US, have introduced AI laws and regulations.

To stay current on AI legal developments, practitioners must monitor laws, regulations, and industry standards across practice areas and around the globe. Laws that affect legal practice or AI use will not always include the words “artificial intelligence” in the title.

Sr. Specialist Legal Editor, Thomson Reuters

As law firms and businesses adopt new AI systems and use cases, the legal framework must evolve, and professionals must ensure that safeguards are in place.

Below is a broad overview of the latest AI laws and regulations internationally and in the US.

International laws

In June 2024, the European Union (EU) adopted the Artificial Intelligence Act, Regulation (EU) 2024/1689 (EU AI Act), as the first comprehensive legal framework to address AI development, supply, and use.

This legislation aims to support AI innovation while ensuring that AI use is safe, transparent, non-discriminatory, and environmentally friendly, and that it is subject to human oversight to prevent harmful outcomes.

The EU AI Act defines roles and obligations for covered organizations throughout the AI supply chain and applies extraterritorially to certain organizations based outside the EU.

For more on the roles and obligations of operators in the AI supply chain, see Practice Note, EU AI Act compliance: introduction: Operators in the AI value chain.

For more on the EU AI Act’s extraterritorial reach, see EU AI Act Compliance for Non-EU Practitioners Checklist.

The EU AI Act establishes risk-based AI classifications, with different requirements applying to each risk level. The four risk classifications are:

- Minimal or no risk systems

- Limited risk AI systems

- High-risk AI systems

- Unacceptable or prohibited AI practices

A higher risk classification may subject an organization to more burdensome obligations or may prohibit it from offering the AI system in the EU altogether.

Prohibited AI practices pose an unacceptable risk

The EU AI Act bans AI systems that pose an unacceptable risk, including practices that are deemed incompatible with EU fundamental rights and values. Prohibited AI practices include, for example, practices that:

- Exploit a person’s or group’s vulnerabilities such as age, disability, or economic situation

- Enable emotion recognition in workplaces or educational institutions

- Create or expand facial recognition databases through untargeted scraping of facial images from the internet

High-risk AI has strict regulations

High-risk AI systems are those that may affect safety or fundamental rights. They include:

- AI used in regulated products such as toys, cars, aircraft, medical devices, and lifts

- AI used in areas such as biometrics, education, employment, critical infrastructure, and the administration of justice

High-risk AI systems must meet technical compliance requirements. Covered organizations must meet additional requirements based on their role in the AI system supply chain.

For more on EU AI Act risk classifications, see Practice Note, EU AI Act: AI system risk classification.

Generative AI and transparency

Generative AI (GenAI) solutions like ChatGPT may be considered general-purpose AI (GPAI) models or systems that must comply with specific obligations (see Practice Note, EU AI Act: General purpose AI models). In addition, GenAI solutions must comply with copyright laws and meet certain transparency requirements.

For example, developers of AI systems that interact with an individual must ensure that:

- The individual is informed that they are interacting with an AI system

- The transparency information is clear and easily identifiable

Similarly, AI systems that generate synthetic content must:

- Label the output as artificially generated

- Ensure the transparency information is clear and easily identifiable

- Ensure the technical solutions for labeling are effective, interoperable, robust, and reliable

For more information about the EU AI Act’s transparency obligations, see EU AI Act transparency requirements: checklist.

US laws

Federal AI legal developments

The US does not have a comprehensive federal law regulating AI.

On January 23, 2025, President Trump signed the executive order Removing Barriers to American Leadership in Artificial Intelligence. The order followed his January 20, 2025 revocation of former President Biden’s October 2023 Executive Order 14110, which had mandated safety testing and reporting and the development of key standards, and which aimed to prevent discriminatory practices and protect civil rights.

For more on AI-related White House policy, see the Trump Administration Data Privacy & Cybersecurity Developments Tracker: Artificial Intelligence.

Several US federal agencies have addressed AI through rules, regulatory guidance, or enforcement initiatives. For example:

- Securities and Exchange Commission (SEC) — The SEC established the Cyber and Emerging Technologies Unit (CETU) to target AI-related fraud.

- Federal Trade Commission (FTC) — The FTC instituted a rule banning fake reviews, which includes AI-generated reviews.

- Federal Communications Commission (FCC) — The FCC acted to restrict robocalls that use AI-generated voices.

For more on AI-related federal regulations, including the AI Action Plan released on July 23, 2025 to implement the administration’s “global AI dominance” policy, see Developments in US AI Law and Regulation: 2025 Tracker.

State AI legal developments

Many US state laws affect AI, including laws that address data protection, intellectual property, employment, healthcare, algorithmic decision-making, or GenAI. A few states have enacted AI-specific legislation. Two of the most notable US state AI laws are:

- Colorado’s Consumer Protections in Interactions with Artificial Intelligence Systems (SB 24-205). In May 2024, Colorado became the first US state to pass comprehensive AI legislation. The law takes a risk-based approach similar to the EU AI Act and requires organizations developing or deploying high-risk AI systems to:

  - Develop an AI risk management program

  - Avoid algorithmic discrimination in consequential decisions

  - Meet rigorous reporting, disclosure, and compliance obligations

- Utah’s Artificial Intelligence Consumer Protection Amendments and AI Policy Act. In 2024 and 2025, Utah enacted laws governing GenAI use in consumer transactions and regulated services.

Colorado’s law takes effect on February 1, 2026. For more information, see Legal Update, Colorado Enacts Comprehensive AI Legislation Targeting Discrimination.

For more on Utah’s AI laws, see Utah AI Consumer Protection Compliance and AI Policy Act Checklist.

How AI is affecting legal practice

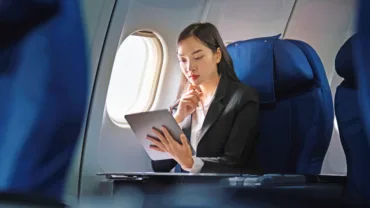

AI is reshaping the legal profession, changing both legal work and the client experience. AI automation in document review, legal research, and contract analysis is expected to save lawyers at least four hours per week. Lawyers can use this extra time to focus on strategy and increase their billable hours.

Clients benefit from quicker response times, fewer human errors, and sharper case analytics. Lawyers benefit from AI’s ability to support more personalized client discussions and recommendations.

However, AI use raises concerns about ethics and the reliability of AI-generated information. AI always requires human supervision, especially when lawyers give legal advice or represent clients in court.

As AI adoption grows, the legal profession will likely need additional roles such as AI specialists, IT and cybersecurity specialists, AI implementation managers, and AI trainers.

According to the Thomson Reuters Institute 2025 Generative AI in Professional Services Report, at least 95% of attorneys believe GenAI will be integrated into their workflows within five years, helping them with these key tasks:

- Document review — AI can summarize thousands of documents, and these summaries can help lawyers quickly determine whether the information is relevant to a specific case.

- Legal research — AI can efficiently work through statutes, case law, and legislation and suggest relevant citations, which still require verification. Done manually, this task alone could take thousands of hours.

- Drafting memos and contracts — AI can produce a memorandum much faster than traditional methods and can help automate contract drafting.

Ultimately, AI will change how lawyers serve their clients. The Thomson Reuters Institute report found that more than 59% of lawyers believe AI will help them process large volumes of legal data, over 40% think it could speed up response times, and 30% think it can reduce human error.

As AI continues to evolve, legal professionals must balance innovation with ethics and regulation.

To keep up with AI legal developments, see the AI Toolkit (US).
