Improve your AI output by including the right information and avoiding common pitfalls

We’ve previously provided an overview of how large language models (LLMs) work, the benefits and limitations of these models, and why the quality of their output depends on the quality of the prompts you give them. We also explained why evaluating and deciding how to apply LLM-powered tools requires a degree of AI literacy, or at least a fundamental understanding of how LLMs work.

Below, we offer tips for getting the most from LLMs by providing sufficient context with prompts.


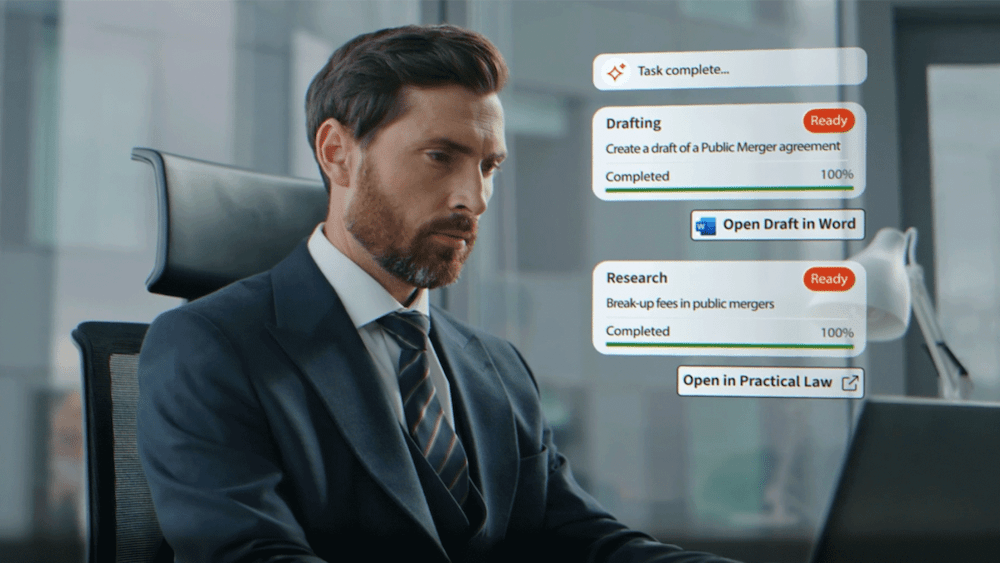

CoCounsel Legal

AI lawyers swear by thanks to tested prompt libraries grounded in trusted content

Context is critical

LLMs like GPT-4 are trained on vast amounts of data and can handle a wide range of tasks across topics, but they still have limitations. LLMs can be remarkably perceptive (for instance, they can often detect sarcasm and subtleties in tone), but their knowledge is limited to the data on which they were trained. They also lack humans’ abstract reasoning abilities and can struggle to make accurate assumptions when they’re given insufficient information.

Ultimately, LLMs are just predictive models that mimic human logic through sophisticated pattern recognition. If your prompts are unclear, the AI might not understand your intent.

Adding context supplies information an LLM wasn’t trained on and closes reasoning gaps. Context is key to writing good prompts. In relation to AI, context can be defined as information that influences how the AI understands and responds to a request. A lack of context leaves room for ambiguity, which can lead to erroneous conclusions and unhelpful, or even wrong, answers.

Specific-use AI that’s specialized in a particular area of knowledge, like CoCounsel, is less likely to mistake your intent because of a vague prompt. That’s because it draws on a specific knowledge base, such as a database of current case law, statutes, and regulations. It also relies on back-end prompting that guides your request. But specific-use AI still benefits from sufficient contextual background in your prompt. That background helps it interpret your requests more accurately and produce better output.

To ensure sufficient context in prompts to legal AI, include the type of case you’re working on, such as personal injury or employment. Also include basic facts about the case and the types of documents you want reviewed, like contracts and discovery. Consider whether specific dates or time frames are critical to understanding your inquiry. Then specify the type of output you’re looking for: a long summary, a brief paragraph, or a certain kind of analysis.

By including these contextual details in a prompt, you’re more likely to get tailored, high-quality results that answer your inquiry the first time around.

When you’re just looking for basic information, you don’t need to provide as much context. For example, if you want a general overview of a legal document or case, a simple prompt should suffice. This is also the case when you’re seeking a high-level explanation of the law in a jurisdiction or conducting general legal research.

When you’re dealing with complex or specialized information — such as complex legal statutes and acronyms, or niche terms in a particular practice area — it’s important to give the AI more detail. Additional context is critical when what you intend might not be immediately clear, or when you’re seeking deeper reasoning from the AI. By adding context, you’ll help the AI fully understand what you’re asking for, leading to a more accurate response.

A formula for well-structured prompts

A straightforward formula for well-structured prompts is:

Intent + Context + Instruction

Intent

Start with a clear expression of the intent behind your query. This sets the stage for the type of information or answer you’re seeking.

Context

Then, provide context to anchor the AI’s response in a relevant frame of reference. Include specific conditions or background terms that might help the AI better understand the case or scenario.

Instruction

Finally, add the instruction, the actionable part of the prompt where you tell the AI what task you want it to perform.

As an example, you might write “I’m seeking to discredit an expert witness” for your intent. For context, you can add the type of case you’re working on and the document you’re asking the AI to review: “The document contains all prior testimony of the expert I’m seeking to discredit in a medical malpractice case.” Your instruction could be: “Does the document contain any explicit or implied contradiction inconsistent with the expert’s prior testimony?”
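To make the formula concrete, here is a minimal Python sketch that assembles the three parts in order. The `build_prompt` helper is hypothetical and is not part of CoCounsel or any AI product’s API. It simply joins intent, context, and instruction with blank lines, and the strings reuse the example above.

```python
def build_prompt(intent: str, context: str, instruction: str) -> str:
    """Assemble a prompt in Intent + Context + Instruction order (hypothetical helper)."""
    parts = [intent.strip(), context.strip(), instruction.strip()]
    # Drop empty parts and separate the rest with blank lines so each reads as its own block.
    return "\n\n".join(p for p in parts if p)


prompt = build_prompt(
    intent="I'm seeking to discredit an expert witness.",
    context=(
        "The document contains all prior testimony of the expert I'm seeking "
        "to discredit in a medical malpractice case."
    ),
    instruction=(
        "Does the document contain any explicit or implied contradiction "
        "inconsistent with the expert's prior testimony?"
    ),
)
print(prompt)
```

Keeping the parts as separate arguments makes it easy to swap in new context or a different instruction while leaving the rest of the prompt unchanged.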

Beyond the formula: More techniques for better prompts

Assigning a persona — such as a lawyer in a particular practice area — can be helpful in narrowing the scope of your prompt. For example: “You are an attorney reviewing discovery in a product liability case. Analyze the documents provided and identify any potential liability and the parties involved.” This context assigns a persona to the AI, instructing it to provide an answer from a specific perspective.

Another technique is to declare a condition precedent or presupposition. This helps ensure the AI’s analysis is contextually appropriate and relevant to your needs. Setting a specific condition that must be acknowledged or fulfilled before the AI executes an instruction can save you time by reducing irrelevant results. For example: “If the document discusses employment agreements, summarize the sections related to termination and severance pay.” Writing the prompt this way tells the AI you only want information related to employment agreements.
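The persona and condition techniques can be combined in a single prompt. The Python sketch below is illustrative only. `conditional_prompt` is a hypothetical helper, and the persona text is an invented example chosen to match the employment agreement condition.

```python
def conditional_prompt(persona: str, condition: str, instruction: str) -> str:
    """Combine a persona with a condition the AI should check before acting (hypothetical helper)."""
    # The persona sets the perspective; the "If ..." clause gates the instruction on the condition.
    return f"{persona}\n\nIf {condition}, {instruction}"


prompt = conditional_prompt(
    persona="You are an attorney reviewing documents in an employment matter.",
    condition="the document discusses employment agreements",
    instruction="summarize the sections related to termination and severance pay.",
)
print(prompt)
```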

Prompt reinforcement is a simple yet powerful way to enhance the clarity of your prompts, especially when adherence to instructions is paramount. You can reinforce a prompt by restating its key content or by specifying areas on which to focus. Either approach helps produce more precise results.

Perhaps you want the AI to respond in a particular format. You can make that far more likely by establishing example patterns. Let’s say you want CoCounsel to list every medical diagnosis indicated in the document alongside its date of diagnosis. You also want both the date and the description in a certain format.

You can write an example with placeholder text, such as [mm/dd/yyyy]: [description of diagnosis], which the AI will follow when generating its response. Indicators such as brackets also help delineate the placeholder text you want the AI to replace. Avoid parentheses for this purpose, however. Because they are so common in ordinary text, the AI may not recognize them as placeholders.
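Here is a rough Python sketch, assuming you receive the AI’s answer as plain text. It supplies the bracketed pattern in the prompt and then checks which lines of a response did not follow it. The helper names, the regular expression, and the sample response are all hypothetical illustrations, not output from CoCounsel.

```python
import re

# Example pattern shown to the AI; the brackets mark text it should replace.
EXAMPLE_PATTERN = "[mm/dd/yyyy]: [description of diagnosis]"

# Matches a line that follows the pattern once the placeholders are filled in.
LINE_RE = re.compile(r"^(\d{2})/(\d{2})/(\d{4}): (.+)$")


def build_format_prompt(task: str) -> str:
    """Append the example pattern to a task description (hypothetical helper)."""
    return f"{task}\nList one diagnosis per line in this format:\n{EXAMPLE_PATTERN}"


def nonconforming_lines(response: str) -> list[str]:
    """Return the non-blank lines of a response that do not match the requested format."""
    return [
        line
        for line in response.splitlines()
        if line.strip() and not LINE_RE.match(line.strip())
    ]


# Invented sample response, used only to exercise the check.
sample_response = """03/14/2021: Type 2 diabetes
Hypertension, diagnosed in 2019
11/02/2022: Lumbar disc herniation"""

print(nonconforming_lines(sample_response))
# ['Hypertension, diagnosed in 2019']
```

A line flagged this way is a cue to restate the pattern in a follow-up prompt rather than to fix the output by hand.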

Lastly, LLM responses can almost always be improved incrementally, so iterate on and refine your prompt to get the best possible results. Even a good prompt can be refined with a follow-up prompt. You can also instruct the LLM to help improve your prompt.

Common prompting traps

Just as important as adding the right information to your prompts is avoiding pitfalls.

Too much and immaterial information

Adding too much information, or data that’s immaterial to your instruction, can influence or confuse the AI. Unlike a human, an AI may not reliably distinguish relevant from irrelevant information. It will consider all the information you give it when “thinking.”

Primacy and recency bias

Be aware of the “lost in the middle” effect, also described as primacy and recency bias. An LLM is more likely to overlook information in the middle of a prompt than information at the beginning or end. Keep the most important information, the instruction to the AI, at the start or end of the prompt.
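One way to apply this is to state the instruction first, place the supporting material in the middle, and restate the instruction at the end. The Python sketch below assumes your instruction is short enough to repeat. The `sandwich_prompt` helper and the “Reminder:” label are hypothetical choices. Repeating the instruction once also acts as prompt reinforcement. Repeating it many more times risks the majority label bias described below.

```python
def sandwich_prompt(instruction: str, material: list[str]) -> str:
    """Place the instruction at both the start and the end, with supporting material between."""
    middle = "\n\n".join(material)
    # The closing restatement keeps the instruction out of the easily overlooked middle.
    return f"{instruction}\n\n{middle}\n\nReminder: {instruction}"


prompt = sandwich_prompt(
    "Summarize the termination clauses.",
    ["[text of contract A]", "[text of contract B]"],
)
print(prompt)
```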

Majority label bias

Majority label bias refers to an LLM’s tendency to place more consideration or weight on words or concepts that appear more frequently in a prompt. Repetition signals emphasis to the AI, and while it can be used to your advantage with reinforcement prompting, it can unintentionally result in less on-point responses.


Avoid lumping topics together

Most LLM-based tools offer a way to separate different tasks, topics, or projects. For instance, CoCounsel segregates matters into individual chat environments with independent context windows. Begin a new chat when you want to work on a new topic.

Remember the AI’s limitations

If you don’t account for the AI’s limitations, it could lead you down the wrong path. Respecting the AI’s weaknesses will lead to better results and help you avoid wasted time and frustration. Math, counting, and sorting are difficult tasks for an LLM because it is a predictive model. It predicts likely text rather than performing calculations.
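Because counting and sorting are weak spots, a practical pattern is to have the AI extract the information and let ordinary code do the arithmetic. The Python sketch below assumes the AI has already returned lines in the mm/dd/yyyy: description format. `sort_by_date` and the sample entries are hypothetical.

```python
from datetime import datetime


def sort_by_date(lines: list[str]) -> list[tuple[datetime, str]]:
    """Parse 'mm/dd/yyyy: description' lines and return them in chronological order."""
    entries = []
    for line in lines:
        date_text, _, description = line.partition(": ")
        entries.append((datetime.strptime(date_text, "%m/%d/%Y"), description))
    # Sorting happens deterministically here rather than being left to the LLM.
    return sorted(entries)


# Invented sample of AI-extracted lines, deliberately out of order.
ai_output = [
    "11/02/2022: Lumbar disc herniation",
    "03/14/2021: Type 2 diabetes",
    "07/30/2021: Hypertension",
]
ordered = sort_by_date(ai_output)
print(len(ordered))  # 3
for when, description in ordered:
    print(when.strftime("%m/%d/%Y"), description)
```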

Assumptions

And don’t assume the AI will fill in unstated assumptions. Consider what the AI knows and can access beyond your prompt. Also consider what has been retained in the context window and what has dropped out of it.

More resources

Generally, it’s good to be very specific about what you want from the AI. Ambiguity can confuse LLMs, so make sure to avoid generic or vague references. And specify not only what you want, but how you want the AI to respond.

To get the most from AI, it’s important to provide sufficient context when you input prompts, which will help tailor and refine your results, and to be aware of pitfalls that may muddy intent or produce irrelevant or incorrect answers.

And here are a few more resources our team has found quite valuable for improving prompting skills:

- 5 prompt engineering techniques for legal work

- Lost in the Middle: How Language Models Use Long Contexts

- The basics of generative AI prompts for legal departments
