
This is a transformational period for businesses, as advances in technology such as artificial intelligence (AI) increasingly affect every industry, reshaping how corporations operate, approach staffing, and serve customers. For firms and corporate departments within the legal and risk industry, the ethical and regulatory issues of using AI are quickly taking center stage.

To help industry teams peel back the layers and better understand when to use AI, when not to use it, how to handle regulatory compliance issues, and more, the Thomson Reuters Future of Professionals report surveyed more than 1,200 professionals working in the legal, risk, tax and accounting, compliance, and global trade fields.

Regulating AI

Despite the excitement around using AI in business and the benefits to be gained, the focus on regulating AI is shifting into higher gear, as is the call for businesses to implement usage, privacy, and communication policies. Many professionals worry that, if AI is not properly managed, the resulting damage could be significant.

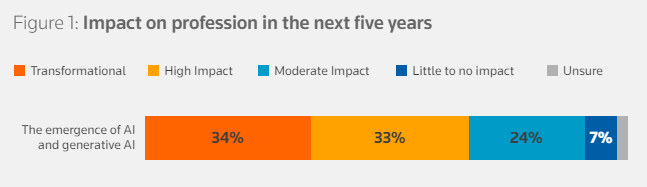

There’s no doubt that increased adoption of AI in business has sparked excitement. In fact, according to the Future of Professionals report, more than two-thirds of professionals surveyed (67%) said they expect the emergence of AI and generative AI (GenAI) to have either a transformational or a high-impact effect on their profession over the next five years.

Law firms, for instance, are optimistic about the productivity gains of AI. More specifically, they see value in using AI to conduct large-scale data analysis and to perform non-billable administrative work with greater accuracy. Completing such tasks faster and better gives legal professionals more time to focus on billable work, and firms may be able to recapture revenue lost to write-offs caused by inefficiencies within the practice. Given such benefits, many firms and departments are already leveraging AI.

However, the need for regulation to provide clarity, build trust, and mitigate risk has not gone unnoticed. According to the report, the vast majority (93%) of professionals surveyed said they recognize the need for regulation. Among the top concerns are a lack of trust in AI and unease about its accuracy. This concern is especially acute when AI output is used as advice without a human checking it for accuracy.

The call for firms and in-house departments to create and implement usage, privacy, and communication policies is growing louder. In addition, more than half (53%) of law firms and 43% of corporate legal departments said they believe that industry-level regulations governing the professional ethics of AI are necessary.

Although regulation is still in its early stages, regulatory activity is quickly gaining momentum.

One such example is President Joe Biden’s executive order issued on October 30, 2023. The “Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence” aims to balance the benefits of AI against its potential risks, and it outlines key principles and guidelines for the development and use of AI within the United States.

For industry professionals, the executive order presents both opportunities and challenges. The need to advise and represent clients on AI-related legal issues, such as ethics and the cost of compliance, will create additional growth opportunities for the sector. At the same time, the executive order affects how the legal sector operates and delivers legal services: legal professionals will need to adopt and use AI systems in their own work, such as research and analysis.

This is just one of several regulatory efforts underway at the federal, state, and international levels. Additional developments include, but are not limited to:

- The proposed American Data Protection and Privacy Act at the federal level.

- Legislation that has been enacted in nearly a dozen U.S. states, as well as legislation that is pending in almost a dozen additional states.

- The European Union’s proposed AI Act (EU AI Act), which aims to provide a regulatory framework for global AI governance.

Navigating the challenges of using AI

Properly navigating when and how to leverage AI is one of the challenges facing risk professionals, especially since AI regulations are still emerging and reshaping the landscape.

As noted earlier, many risk professionals believe AI can drive productivity and internal efficiencies. That’s not to say, however, that concerns do not exist, especially as they relate to accuracy and data security.

It likely comes as little surprise that 66% of professionals believe AI will also bring new challenges, according to the Thomson Reuters report. One of the greatest fears is a lack of accuracy in what existing AI chat tools produce, whether in response to queries or in suggestions the tools offer proactively. More specifically, 25% of respondents said their biggest fear was compromised accuracy, and 15% said it was data security.

Therefore, human intervention is critical. Humans must remain involved in the process to check for accuracy rather than taking AI output at face value. This is especially true for sensitive or high-stakes matters and whenever AI output is used as advice.

“I believe AI may make people less inclined to be engaged and more reliant on systems that are (currently) imperfect,” cautioned one survey respondent. “This will require regulation and constant technological change to keep up with risk factors related to AI.”

Ethical use cases for AI

Will ethics go “out the window” as AI becomes increasingly embedded in the fabric of the business community? Some fear that may be the case, so it is important that firms and corporate departments remain focused on the ethical concerns surrounding AI.

According to the Thomson Reuters report, one of the top five fears is that AI will “push ethics out of the window.”


It is important for professionals to remember that AI is usually best used to jumpstart a process or to reduce the time spent on mundane tasks like research. AI should not be relied on by itself to provide an accurate answer or conclusion. To elaborate, consider the following use cases:

Jumpstart research

Use AI, for example, to gather background information or to stay up to date on legislative developments and precedents. It can give professionals a time-saving jumpstart on research by reducing the time spent sifting through and summarizing content. For corporate departments, such insights can help in-house professionals make more informed, strategic decisions for their organization.

Furthermore, one survey respondent said AI will help “speed up research” and decision-making as “natural language queries will replace complex reports.”

Speed up document review

As one survey respondent explained, “Document analysis tasks that are currently done manually can be largely automated, and made much quicker, leading to quicker response times.”

Enhance data synthesis and analysis

Industry professionals spend a lot of time creating, reviewing, and sending various documents and forms. AI tools backed by expert human oversight can help professionals sort files quickly and seamlessly, improve accuracy, and mitigate risk.

Speed up client communication

As explained by one survey respondent, “AI can help significantly by doing a lot of leg-work up front with a professional fine tuning and adding the experienced advice a client needs. It can all be done faster so the client expectations of communication are met.”

Navigating the ethical and regulatory issues of using AI isn’t always easy, especially as the technology and the regulatory landscape continue to evolve. To learn more, explore the Future of Professionals report.
